Prediction of an impending AI revolution

By Ajith Ram October 12, 2016

- Current chip manufacturing tech expected to last for years

- Fully self-driving vehicles expected within five to ten years

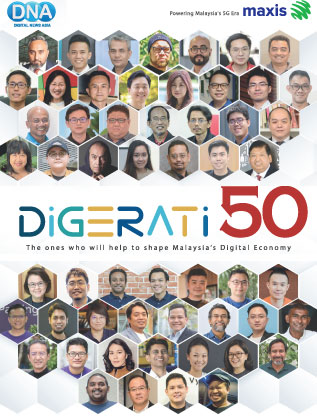

David Kirk is an Nvidia Fellow and served from 1997 to 2009 as Nvidia's chief scientist. Kirk received the Distinguished Alumni award from the California Institute of Technology in 2009.

Prior to joining Nvidia, he served from 1993 to 1996 as chief scientist and head of technology for Crystal Dynamics, a videogame company. Kirk has more than 60 patents and patent applications relating to graphics design and has published more than 50 articles on graphics technology.

DNA caught up with David Kirk for an interview at the recently concluded GTCx conference in Melbourne.

DNA: At our GTC meeting, you said that you expect CMOS-based chips to be around for a long time. What are your thoughts on the current state of EUV R&D?

I base my expectations on continuing demand for CMOS chips, not on any particular R&D technology. To paraphrase Mark Twain, the rumours of the demise of Moore’s Law are greatly exaggerated. In the past, the physics of Dennard scaling provided us with reduced power and increased clock speed as feature size decreased. Although we no longer get all of the benefits of Moore’s Law scaling, we do continue to get more transistors each year. Growing demand for chips, and the higher volumes that come with it, may also drive down cost.
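
For readers unfamiliar with the term, the classical Dennard scaling argument Kirk refers to can be written out as a short worked equation. This is the textbook idealisation, not something stated in the interview; the scaling factor $\kappa > 1$ and the symbols $C$ (capacitance), $V$ (supply voltage), $f$ (clock frequency) and $A$ (circuit area) are notation introduced here for illustration.

$$
P = C V^{2} f
\quad\longrightarrow\quad
P' = \frac{C}{\kappa}\left(\frac{V}{\kappa}\right)^{2}(\kappa f) = \frac{P}{\kappa^{2}},
\qquad
A' = \frac{A}{\kappa^{2}}
\quad\Longrightarrow\quad
\frac{P'}{A'} = \frac{P}{A}
$$

Under these assumptions each circuit draws less power and clocks faster while power per unit area stays constant. Once supply voltage could no longer be reduced in step with feature size, that balance stopped holding, even though transistor counts kept rising.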

DNA: What are the key advantages of CUDA over OpenCL?

CUDA is part of a thriving ecosystem with over 400,000 active developers, and close to 1 billion CUDA-capable parallel processors. There are also many domain-specific libraries that are GPU-accelerated using CUDA. Every major Deep Learning and Neural Network framework is GPU-accelerated using CUDA. We recently announced TensorRT, which is an optimised inference engine accelerated using CUDA. We are passionate about making CUDA on GPUs a great platform for developers.

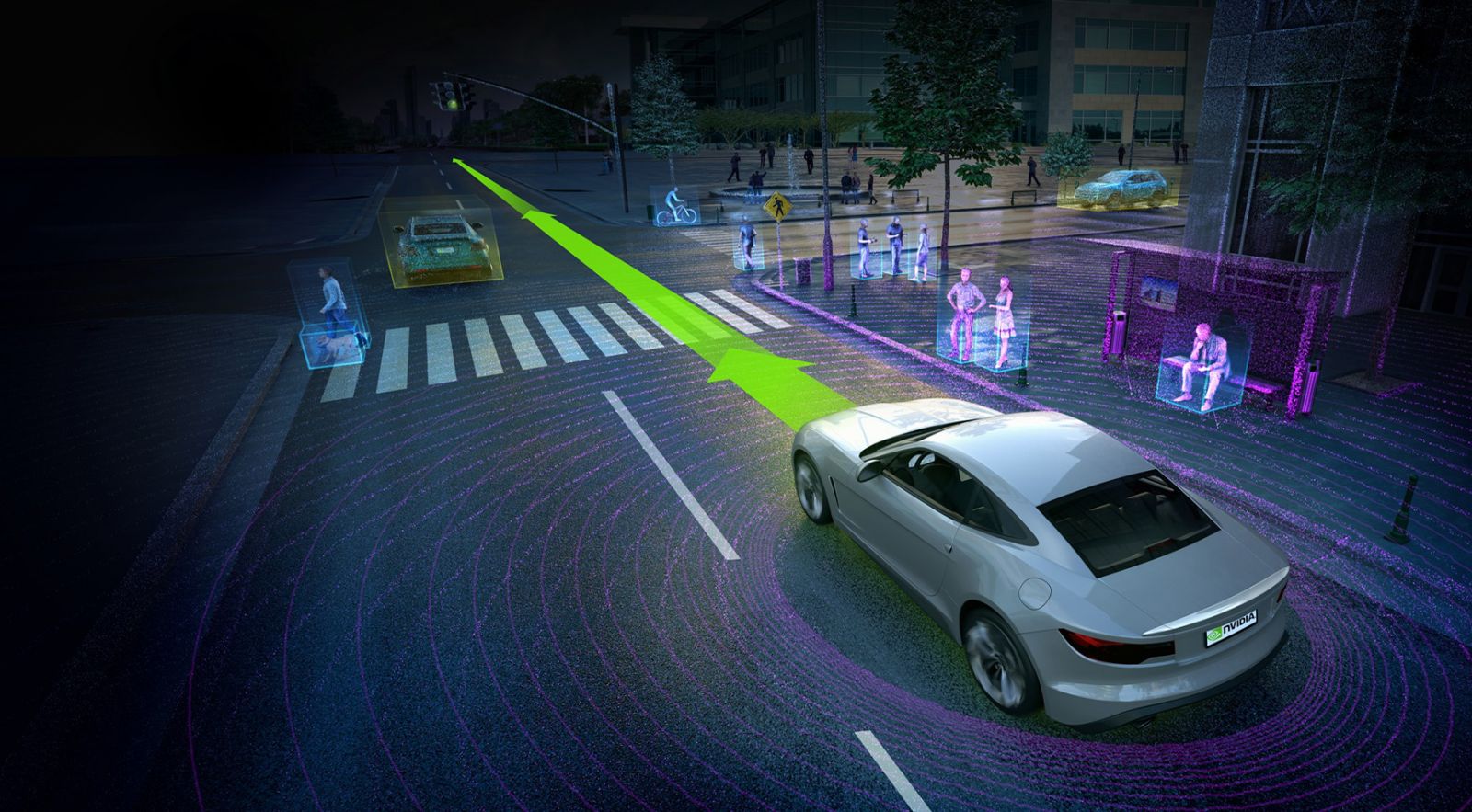

DNA: In your keynote, you mentioned that most of the key pieces for a self-driving car are falling into place. What are the key challenges remaining?

One of the big remaining challenges is putting all of the pieces together. An autonomous vehicle must be capable of self-driving, navigation, and sensing the environment without fail, to an exceptionally high degree of reliability. If the vehicle cannot independently determine what to do and where to go, it must stop. So, every piece must work together flawlessly. We are working on all of these pieces, with many partners.

DNA: Do you think we will see fully autonomous cars on the road in the next 10 years - without a driver inside at all?

Absolutely. ADAS systems are already providing a great measure of improvement in safety in many vehicles. There are also many efforts to provide fully autonomous vehicles within five years. Surely some of these will succeed. We are committed to being part of this technology revolution.

DNA: We are now seeing GPUs with HBM memory as well as traditional DDR5 memory modules. Do you think HBM will ever be cheap enough to completely displace traditional memory?

It’s interesting that you refer to DDR5 as traditional; it’s relatively new and not inexpensive. There is always a market mix between cheap commodity memory technology and specialised high-speed memory for more demanding applications. The various flavors of DDR have in the past been part of that more demanding mix of requirements and have gradually moved to be more mainstream. I expect that as the use of HBM increases, costs may come down and it may come into more mass market use. Time will tell.

DNA: What are the key challenges in inferencing that you expect to be solved in the next 5 years?

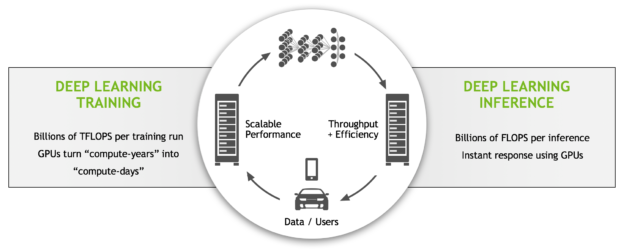

Inferencing is rapidly becoming a core workload in datacenters and devices. Very soon, every image, audio clip, and video clip received on any device or uploaded to any cloud storage service will be automatically analysed and tagged. One of the biggest challenges will be to efficiently process this workload in a distributed fashion on a variety of devices and locations. IoT will drive more embedded processing, which will demand higher performance on every device. GPU-based SoCs, such as the recently announced Xavier SoC, can be part of many smart devices. GPUs are already a core component of datacenter inferencing.

DNA: What architectural advantage does a GPU have over a co-processor like Xeon Phi?

GPUs are processors that are purpose-built for parallel processing. The parallelism in GPUs has been driven by the immensely parallel workload of 3D graphics. GPU architecture has been developed to provide many parallel execution threads that can execute independently or collaboratively, and datapaths that can be shared or separate.

This highly parallel architecture is also ideal for many other applications, including Deep Learning and Inferencing. Xeon Phi is fundamentally a CPU, with a small number of higher speed threads. This is a poor architecture for highly parallel workloads. The early versions of the Xeon Phi architecture were intended to compete with GPUs in 3D graphics, but were unsuccessful.
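
The distinction Kirk draws between threads that execute independently and threads that execute collaboratively can be illustrated with a small sketch. It is plain, sequential Python that only models the two patterns; it is not CUDA and does not run on a GPU. The function names `saxpy_independent` and `block_sum_collaborative` are hypothetical, chosen for this illustration.

```python
def saxpy_independent(a, xs, ys):
    """Independent pattern: one logical thread per element.

    No thread reads another thread's result, so on parallel hardware
    every element could be computed at the same time.
    """
    return [a * x + y for x, y in zip(xs, ys)]


def block_sum_collaborative(values):
    """Collaborative pattern: a tree reduction over shared storage.

    Each pass of the while loop models one synchronised step in which
    the active threads add in a partner's partial sum. Within a pass,
    no thread reads a slot that another thread writes, so the steps
    inside one pass could safely run in parallel.
    """
    shared = list(values)  # stands in for memory shared by a thread block
    if not shared:
        return 0
    n = len(shared)
    stride = 1
    while stride < n:
        for tid in range(0, n - stride, 2 * stride):
            shared[tid] += shared[tid + stride]
        stride *= 2  # a barrier between passes is assumed here
    return shared[0]
```

In the first function the work splits cleanly with no communication. In the second, threads cooperate through shared storage, and the number of passes grows with the logarithm of the input size rather than with the input size itself.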

DNA: When do you expect real-time ray tracing in videogames?

Real-time ray tracing could now be incorporated into videogames, but it’s not likely to provide value outside of traditional ray tracing strengths in the areas of reflections and shadows. Ray tracing could also provide benefits in VR or AR, where foveated rendering (more samples near the center of focus and fewer in the periphery) can improve efficiency and image quality.
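
Foveated rendering as Kirk describes it, with more samples near the center of focus and fewer in the periphery, can be sketched as a per-pixel sample budget. This is a minimal illustration and not how any particular renderer does it: the exponential falloff, the function name `samples_per_pixel`, and the parameters `max_samples`, `min_samples` and `falloff_radius` are all assumptions made for the example.

```python
import math


def samples_per_pixel(x, y, gaze_x, gaze_y,
                      max_samples=16, min_samples=1, falloff_radius=200.0):
    """Return a ray-sample count that shrinks with distance from the gaze point.

    All parameters are hypothetical tuning values; distances are in pixels.
    """
    distance = math.hypot(x - gaze_x, y - gaze_y)
    # Full sample density at the gaze point, decaying toward the periphery.
    weight = math.exp(-distance / falloff_radius)
    # Never drop below min_samples so peripheral pixels are still rendered.
    return max(min_samples, round(max_samples * weight))
```

A renderer built this way would spend most of its ray budget where the viewer is looking, which is why the technique pairs naturally with the eye tracking found in VR and AR headsets.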
